[Technical Explanation] Virtual Sumo

Hi, I am MAO (@rainage), an interaction engineer/designer at The Designium.

In this article, I'm going to introduce a prototype project, "Virtual Sumo".

I wanted to make a simple "Kamizumo" (paper sumo) game using the user's photo.

Tryout #1 : AutoPifu Sumo

At first, I tried autoPifu to automatically create a mesh of the user. It is a very interesting project that lets you quickly generate a user's mesh by running the Pifu example.

However, there are some issues I need to solve for the game:

(1) The Pifu process pauses the app

I changed the code to run the process in a task and to load the obj file after the process is done.

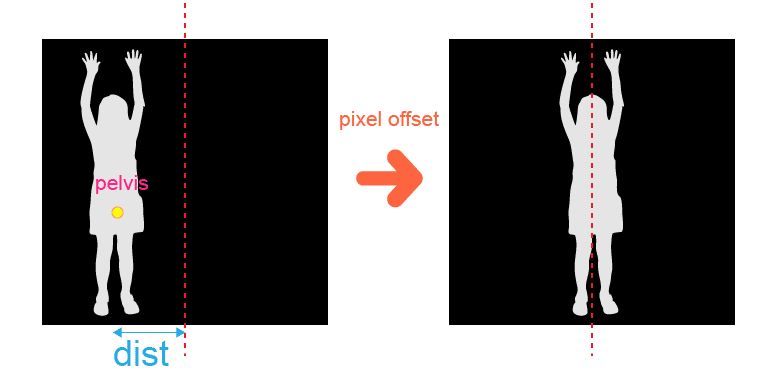
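
A minimal sketch of that pattern in plain C#, assuming the Pifu step can be wrapped in one blocking call. The slow work runs on a worker thread through `Task.Run`, and the obj load only happens once the task has completed. `RunPifuBlocking`, `LoadObj`, and the file names are hypothetical stand-ins, not part of AutoPifu.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

public static class PifuTaskSketch
{
    // Hypothetical stand-in for the slow Pifu step; returns the path of the generated obj file.
    static string RunPifuBlocking(string imagePath)
    {
        Thread.Sleep(200); // simulate long-running work
        return imagePath + ".obj";
    }

    // Hypothetical stand-in for the obj loader.
    static string LoadObj(string objPath) => "mesh loaded from " + objPath;

    // Runs the slow step off the calling thread, then loads the result.
    public static async Task<string> GenerateAvatarAsync(string imagePath)
    {
        string objPath = await Task.Run(() => RunPifuBlocking(imagePath));
        return LoadObj(objPath);
    }
}
```

In Unity, code after an `await` that was started from the main thread normally resumes on the main thread, so the mesh can be created there while the app keeps rendering during the wait.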

(2) The offset of the generated mesh

The Pifu mesh is offset if the user does not stand at the center of the Kinect's view. So I need to shift the snapshot image so that the user is centered, using the user's pelvis position as the reference.
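
As a CPU-side reference for what such a shift does, here is a small sketch. It assumes the pelvis position is already available as a pixel column in the snapshot and that the image is a row-major array; `CenterOffset` and `ShiftRows` are illustrative names. Unlike a flat index shift, it wraps inside each row, so pixels pushed past the edge reappear on the same row.

```csharp
using System;

public static class TextureOffsetSketch
{
    // Pixels to shift so that the pelvis column lands on the image center.
    public static int CenterOffset(int pelvisX, int width) => pelvisX - width / 2;

    // Shifts every row of a row-major image by xOffset, wrapping inside the row.
    public static T[] ShiftRows<T>(T[] src, int width, int height, int xOffset)
    {
        if (src.Length != width * height) throw new ArgumentException("size mismatch");
        var dst = new T[src.Length];
        for (int y = 0; y < height; y++)
        {
            for (int x = 0; x < width; x++)
            {
                // ((a % n) + n) % n keeps the index non-negative for negative offsets.
                int sx = (((x + xOffset) % width) + width) % width;
                dst[y * width + x] = src[y * width + sx];
            }
        }
        return dst;
    }
}
```

For a 4-pixel-wide image with the pelvis at column 3, `CenterOffset` gives 1, and after `ShiftRows` the pelvis pixel sits at column 2, the center column.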

I modified the AutoPifu script and used the GPU to apply the texture offset.

/* Texture2D_Offset.compute */

#pragma kernel CSMain

int width;

int height;

int length;

int xOffset;

struct xColor {

float4 color;

};

StructuredBuffer<xColor> colors;

RWStructuredBuffer<xColor> out_colors;

RWTexture2D<float4> Result;

[numthreads(8, 8, 1)]

void CSMain(uint3 id : SV_DispatchThreadID)

{

int i = ((width * id.y) + id.x+xOffset) % length;

int idx = width * id.y + id.x;

Result[id.xy] = colors[i].color;

out_colors[idx] = colors[i];

}

/* texture2D offset (C#) */

void GPU_Apply()

{

RenderTexture rTexture = new RenderTexture(width, height, 24);

rTexture.enableRandomWrite = true;

rTexture.Create();

texture = rTexture;

ComputeBuffer inputbuffer = new ComputeBuffer(xColorArray.Length, 16);

ComputeBuffer outputbuffer = new ComputeBuffer(xColorArray.Length, 16);

int k = shader.FindKernel("CSMain");

// write data into GPU

inputbuffer.SetData(xColorArray);

shader.SetBuffer(k, "colors", inputbuffer);

// set input

shader.SetInt("width", width);

shader.SetInt("height", height);

shader.SetInt("length", width * height);

shader.SetInt("xOffset", xOffset);

// set output

shader.SetTexture(k, "Result", texture);

shader.SetBuffer(k, "out_colors", outputbuffer);

// set dispatch

shader.Dispatch(k, width / 8 + 1, height / 8 + 1, 1);

// get output data

outputbuffer.GetData(xColorArray);

// release buffer

inputbuffer.Release();

outputbuffer.Release();

}

public struct xColor

{

public Vector4 color;

}

(3) Make the character meshes collide with each other

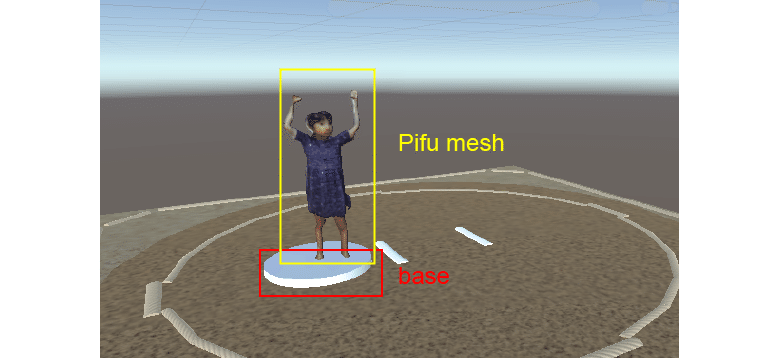

# Let the character stand on the arena (dohyo, 土俵) and interact with gravity

To make the character mesh easier to balance, I put a transparent base under the mesh and made this base the mesh's parent. The gravity calculation between the character and the arena is then based on the base.

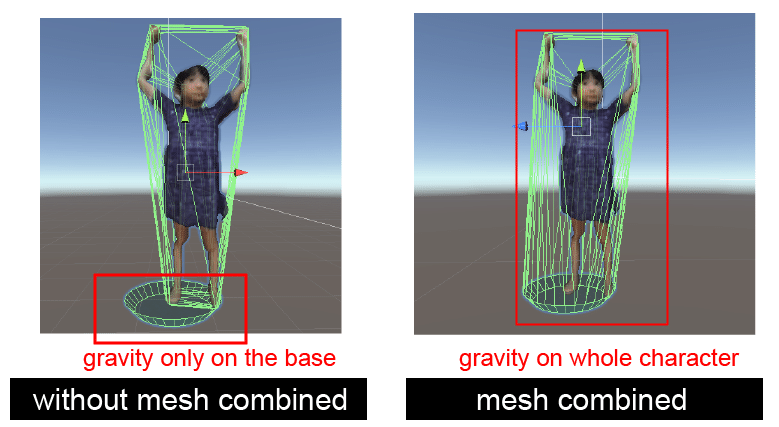

# Gravity of mesh

However, if I only use the mesh collision of the base for the gravity calculation, the character's shape has no effect on how its center of gravity changes between movements.

So I need to combine the meshes of the base and the character by script, then use the combined mesh for gravity.

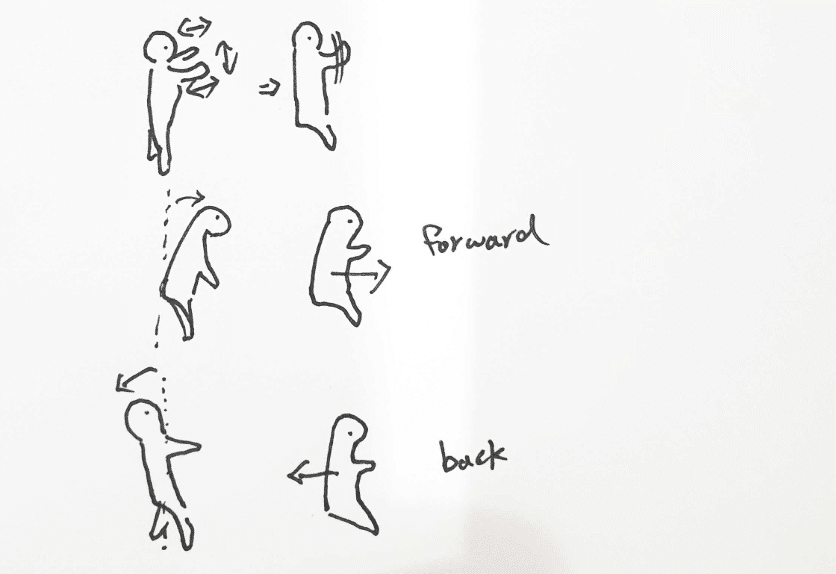
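
To illustrate why the combined shape matters, here is a small sketch of a mass-weighted center of gravity. It treats the base and the character as two parts with known centers and masses, which is a simplification: the physics engine works from the actual collider shape, and the numbers in the usage note are made up.

```csharp
using System;
using System.Numerics;

public static class CenterOfMassSketch
{
    // Mass-weighted average of the part centers.
    public static Vector3 Combine((Vector3 center, float mass)[] parts)
    {
        Vector3 weighted = Vector3.Zero;
        float total = 0f;
        foreach (var (center, mass) in parts)
        {
            weighted += center * mass;
            total += mass;
        }
        if (total <= 0f) throw new ArgumentException("total mass must be positive");
        return weighted / total;
    }
}
```

With a base centered at the origin and a character of equal mass centered at (0.2, 1, 0), the combined center moves to (0.1, 0.5, 0). A leaning character therefore pulls the balance point sideways, which a base-only calculation would never show.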

(4) Make the character move on the arena

To simulate tapping the paper to make the character move, I set the rigidbody's velocity based on the tapped position and add a y component for a little jump.

Rigidbody rb = gameObject.GetComponentInChildren<Rigidbody>();

Vector3 _rbPos = rb.worldCenterOfMass - arenaObj.transform.position;

Vector3 _dist = _hitpoint - _rbPos;

Vector3 _dir = _dist.normalized * 0.7f;

rb.velocity = new Vector3(_dir.x, 1f, _dir.z);

Limitation of tryout 1 prototype

- It takes 20 sec to make an avatar, which is too slow for real-time interaction.

- Because the avatar is based on the user's photo, it looks weird if the shape of the avatar cannot be changed.

Tryout #2 : Paper Sumo

[Unity + Azure Kinect Example for Unity asset]

In this experiment, I tried using the Azure Kinect skeleton to control the paper sumo wrestler as a fighting game.

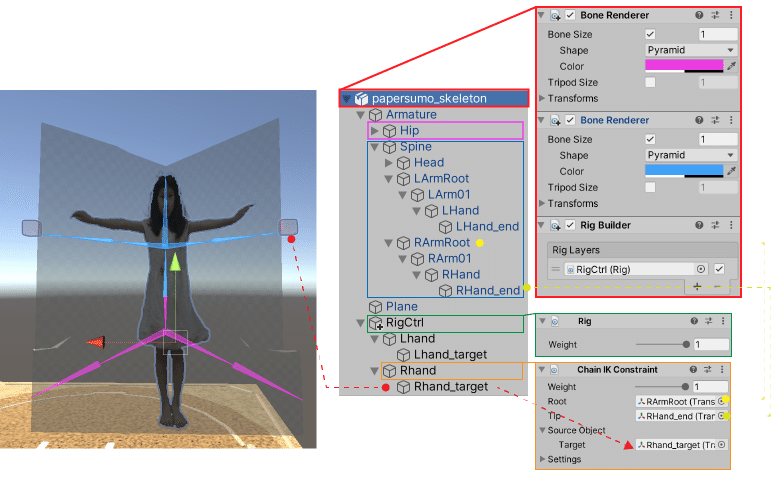

(1) Animation Rigging

I want to make an animation that goes from a sheet of paper to the sumo form, so I need to make a paper-folding animation and let the user control the arms with both hand joints.

In this experiment, I tried "Animation Rigging" as an IK tool.

Animation Rigging can be installed from the preview packages in Package Manager. It is based on the C# Job System and allows mixing different animations in real time.

- Bone Renderer

Bone Renderer is a useful tool for visualizing bones. You can attach several Bone Renderers at the same time to show different parts of the skeleton.

- Chain IK

I chose Chain IK to control the paper arm; it only needs the root and tip points to set up the whole chain.
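
To show how a chain can be solved from just a fixed root and a tip target, here is a minimal FABRIK-style sketch in plain C#. It is an illustration under stated assumptions, not the Animation Rigging implementation: joints are plain positions, no two consecutive joints coincide, and there are no rotation or angle limits. `FabrikSketch.Solve` is a hypothetical name.

```csharp
using System;
using System.Numerics;

public static class FabrikSketch
{
    // Moves the joints in place so the last joint reaches for the target while the first stays fixed.
    public static void Solve(Vector3[] joints, Vector3 target, int iterations = 10, float tolerance = 1e-3f)
    {
        int n = joints.Length;
        if (n < 2) return;

        // Segment lengths are preserved throughout the solve.
        var lengths = new float[n - 1];
        float total = 0f;
        for (int i = 0; i < n - 1; i++)
        {
            lengths[i] = Vector3.Distance(joints[i], joints[i + 1]);
            total += lengths[i];
        }

        Vector3 root = joints[0];

        // Target out of reach: stretch the chain straight toward it.
        if (Vector3.Distance(root, target) >= total)
        {
            Vector3 dir = Vector3.Normalize(target - root);
            for (int i = 1; i < n; i++) joints[i] = joints[i - 1] + dir * lengths[i - 1];
            return;
        }

        for (int it = 0; it < iterations; it++)
        {
            // Backward pass: pin the tip to the target, walk toward the root.
            joints[n - 1] = target;
            for (int i = n - 2; i >= 0; i--)
                joints[i] = joints[i + 1] + Vector3.Normalize(joints[i] - joints[i + 1]) * lengths[i];

            // Forward pass: pin the root back, walk toward the tip.
            joints[0] = root;
            for (int i = 1; i < n; i++)
                joints[i] = joints[i - 1] + Vector3.Normalize(joints[i] - joints[i - 1]) * lengths[i - 1];

            if (Vector3.Distance(joints[n - 1], target) < tolerance) break;
        }
    }
}
```

Only the root position (taken from the first joint) and the tip target are supplied by the caller; every joint in between is derived, which is what makes this kind of solver convenient for a paper arm driven by a single hand joint.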

(2) Azure Kinect

- Gesture Mapping

I modified the gesture detection script so that each gesture can invoke an event, which lets me easily set up the action mapping in the Editor.

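using System.Collections.Generic; // List<T>

using UnityEngine; // MonoBehaviour

using UnityEngine.Events; // UnityEvent

public class GestureListener : MonoBehaviour, GestureListenerInterface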

{

[System.Serializable]

public class GestureEvent

{

public GestureType gesture;

public UnityEvent gestureEvent;

}

public int playerIndex = 0;

public List<GestureEvent> detectGestures = new List<GestureEvent>();

...

public void UserDetected(ulong userId, int userIndex)

{

if (userIndex == playerIndex)

{

KinectGestureManager gestureManager = KinectManager.Instance.gestureManager;

foreach (GestureEvent gesture in detectGestures)

{

gestureManager.DetectGesture(userId, gesture.gesture);

}

}

}

}

- User Shape Crop

Because I need the user's silhouette image for the paper sumo, I check all of the user's joints to get the bounding box, then use the box size to copy the user image from the render texture.
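
The bounding-box-to-square step can be sketched outside Unity as follows, assuming the joints have already been projected to pixel coordinates. Clamping the square to the image bounds is an addition for illustration and is not part of the project code; `SquareAround` is a hypothetical name.

```csharp
using System;
using System.Collections.Generic;
using System.Numerics;

public static class CropRectSketch
{
    // Returns (x, y, size) of a square centered on the points' bounding box, clamped to the image.
    public static (int x, int y, int size) SquareAround(IReadOnlyList<Vector2> points, int imageWidth, int imageHeight)
    {
        if (points.Count == 0) throw new ArgumentException("no points");
        float minX = float.MaxValue, minY = float.MaxValue;
        float maxX = float.MinValue, maxY = float.MinValue;
        foreach (var p in points)
        {
            minX = Math.Min(minX, p.X); maxX = Math.Max(maxX, p.X);
            minY = Math.Min(minY, p.Y); maxY = Math.Max(maxY, p.Y);
        }

        // Use the longer side so the crop is square, but never larger than the image.
        int size = (int)Math.Ceiling(Math.Max(maxX - minX, maxY - minY));
        size = Math.Max(1, Math.Min(size, Math.Min(imageWidth, imageHeight)));

        // Center the square on the box, then push it back inside the image.
        int x = (int)Math.Round((minX + maxX) / 2f - size / 2f);
        int y = (int)Math.Round((minY + maxY) / 2f - size / 2f);
        x = Math.Max(0, Math.Min(x, imageWidth - size));
        y = Math.Max(0, Math.Min(y, imageHeight - size));
        return (x, y, size);
    }
}
```

Keeping the rectangle inside the image matters when pixels are read back from a texture, since a read region that extends past the source is a common source of errors.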

public void GetBodyBox(out Vector3 pmin, out Vector3 pmax)

{

Vector3 _ptmin = new Vector3(100, 100, 0), _ptmax = new Vector3(-100, -100, 0);

KinectManager kinectManager = KinectManager.Instance;

int jointsCount = kinectManager.GetJointCount();

for (int i = 0; i < jointsCount; i++)

{

if (joints[i] != null && joints[i].activeSelf)

{

if (joints[i].transform.position.x < _ptmin.x)

{

_ptmin.x = joints[i].transform.position.x;

_ptmin.z = joints[i].transform.position.z;

}

if (joints[i].transform.position.y < _ptmin.y)

{

_ptmin.y = joints[i].transform.position.y;

_ptmin.z = joints[i].transform.position.z;

}

if (joints[i].transform.position.x > _ptmax.x)

{

_ptmax.x = joints[i].transform.position.x;

_ptmax.z = joints[i].transform.position.z;

}

if (joints[i].transform.position.y > _ptmax.y)

{

_ptmax.y = joints[i].transform.position.y;

_ptmax.z = joints[i].transform.position.z;

}

}

}

pmin = _ptmin;

pmax = _ptmax;

}

...

void CropUserImage() {

Vector3 _pmin, _pmax, _smin, _smax;

userSkeleton.GetBodyBox(out _pmin, out _pmax);

_smin = CameraTool.WorldpointToScreeenPosition(userSkeleton.foregroundCamera, _pmin);

_smax = CameraTool.WorldpointToScreeenPosition(userSkeleton.foregroundCamera, _pmax);

int _w = Convert.ToInt32(_smax.x - _smin.x);

int _h = Convert.ToInt32(_smax.y - _smin.y);

Vector2 _center = new Vector2(_smin.x + _w / 2f, _smin.y + _h / 2f);

int sqr = 0;

if (_h >= _w) sqr = _h;

else sqr = _w;

rectBlob = new Rect(_center.x - sqr/2f, _center.y - sqr/2f, sqr, sqr);

rTex = bgRmManager.GetForegroundRTex();

RenderTexture.active = rTex;

// Rect stores floats, but the Texture2D constructor expects integer sizes.

Texture2D tex = new Texture2D((int)rectBlob.width, (int)rectBlob.height);

tex.ReadPixels(new Rect(Screen.width - rectBlob.x - rectBlob.width, rectBlob.y, rectBlob.width, rectBlob.height), 0, 0);

tex.Apply();

RenderTexture.active = null;

}

Limitation of tryout 2 prototype

Because of the shape of the Azure Kinect's depth image, the user's silhouette sometimes falls outside the depth sensor's range. The user's whole-body image is only of good quality in the middle of the screen.

Editor's Note

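This is Mariko from PR! Paper sumo brings back memories!!! It reminded me of playing it when I was little. Of the virtual sumo prototypes Mao made this time, the first one, "AutoPifu Sumo", is the classic paper sumo where you tap the ring, and the second one, "Paper Sumo", where you move your body, also looks new and fun. I bet many people felt nostalgic about both?? I was charmed by the cute movements of the player's daughter (probably), who is faintly visible. By the way, when I asked Mao, "Did you have paper sumo in Taiwan too?", the answer was yes, Mao played it as a child. Japanese games are popular in Taiwan too, so apparently there are lots of them! Fun games are universal!!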