Creating line art without image-editing software, using stable-diffusion-webui + MangaLineExtraction_PyTorch

Exactly what the title says. The rest is a memo to myself.

① Randomly generate n or more images with stable-diffusion-webui with Dynamic Prompts installed

Prompt

((((monochrome, white_tone ,grayscale, line art)))), {portrait|digital art|anime screen cap|detailed illustration} of {white shirt and white pants 1girl | white sailor uniform 1girl | white blazer 1girl | white casualwear 1girl | white formalwear 1girl | white 1girl | white shirt and white pants 1boy| white uniform 1boy| white casualwear 1boy| white formalwear 1boy| white 1boy}, {standing|contrapposto|walking|running} on {mountain|river|forest|cave|lake|waterfall|castle|desert|park|garden|porch of a Japanese house|japanese-style room|shrine|temple|classroom|living|kitchen|bedroom|cafe|hospital|church|library|office|librarystreet|beach|pool|indoors|outdoors|cage|workshop|abandoned house|abandoned factory|penthouse|conservatory|staircase|tavern|medieval tavern|proscenium theater|arena|entrance hall|dance hall|japanese bar|concert hall|night club|diner|restaurant|Jewelry store|fashion boutiques|apparelshop|convenience store|supermarket|indoor pool|conference hall|shopping mall|prison|operating room|auction|Basement,crypt,cellar|Japanese hotel|bookstore|stage|casino|in the movie theatre|mixing_console|laboratory|mansion|jail bars|dungeon|pedestrian deck|terrace|amusement park|pastoral|tunnel|brick pavement|gazebo|water front|water garden|hedge|row of cherry trees|wheat field|paddy field|vegetation|subway station platform|train station platform|steps|festival|palace|rubble ruins|alleyway|frost covered trees|mediterranean|bamboo grove|jungle|underwater|nebula|Cyberpunk|white_background,simple_background}, looking at viewer, (((solo))), teen age, {0-1$$smile,|blush,|kind smile,|expression less,|happy,|sadness,} {0-1$$upper body,|full body,|cowboy shot,|face focus,} trending on pixiv, {0-2$$depth of fields |8k wallpaper|highly detailed |pov} beautiful face { |, from below|from above| from side| from behind| from back} <lora:test-noline:-3>

Negative prompt

negativeXL_D sdxl-negprompt8-v1m unaestheticXL_AYv1 black_tone

Set the size to 1024*1024 and leave the other parameters at their defaults. Generate as many images as you like.
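To make the prompt syntax easier to follow, here is a minimal sketch of how `{a|b|c}` and `{lo-hi$$a|b|c}` groups turn into one concrete prompt per image. This is a simplified approximation, not the Dynamic Prompts extension's own code: the function name `expand_variants` is hypothetical, and joining multiple picks with ", " is an assumption.

```python
import random
import re

# Matches an innermost {...} group (no braces nested inside it).
_VARIANT = re.compile(r"\{([^{}]*)\}")


def expand_variants(template, rng):
    """Resolve each {a|b|c} or {lo-hi$$a|b|c} group to a random pick."""

    def pick(match):
        body = match.group(1)
        low, high = 1, 1  # a plain {a|b|c} group picks exactly one option
        # Optional "lo-hi$$" prefix: pick between lo and hi options.
        range_match = re.match(r"\s*(\d+)-(\d+)\$\$(.*)", body, re.S)
        if range_match:
            low, high = int(range_match.group(1)), int(range_match.group(2))
            body = range_match.group(3)
        options = [opt.strip() for opt in body.split("|")]
        high = min(high, len(options))
        low = min(low, high)
        count = rng.randint(low, high)
        # Assumption: several picks are joined with ", ".
        return ", ".join(rng.sample(options, count))

    previous = None
    while previous != template:  # repeat until no group is left
        previous = template
        template = _VARIANT.sub(pick, template)
    return template


rng = random.Random(0)  # fixed seed only so the demo is repeatable
template = ("{portrait|digital art} of {white 1girl|white 1boy}, "
            "{0-1$$smile,|blush,} {0-2$$8k wallpaper|highly detailed|pov}")
for _ in range(3):
    print(expand_variants(template, rng))
```

The template here is a shortened version of the prompt above. Each call produces a different combination, which is why one prompt is enough to generate a varied batch.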

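② Using the images from ① as the base, generate the source images for the line art with img2img batch processing + ControlNet

Add the following to the Prompt field

<lora:test-flat:1>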

Input directory

C:\stable-diffusion-webui\outputs\txt2img-images\2023-09-24

Output directory

D:\desktop\line\lineart

Controlnet input directory

C:\stable-diffusion-webui\outputs\txt2img-images\2023-09-24

PNG info

PNG info directory

C:\stable-diffusion-webui\outputs\txt2img-images\2023-09-24

Check all the checkboxes.

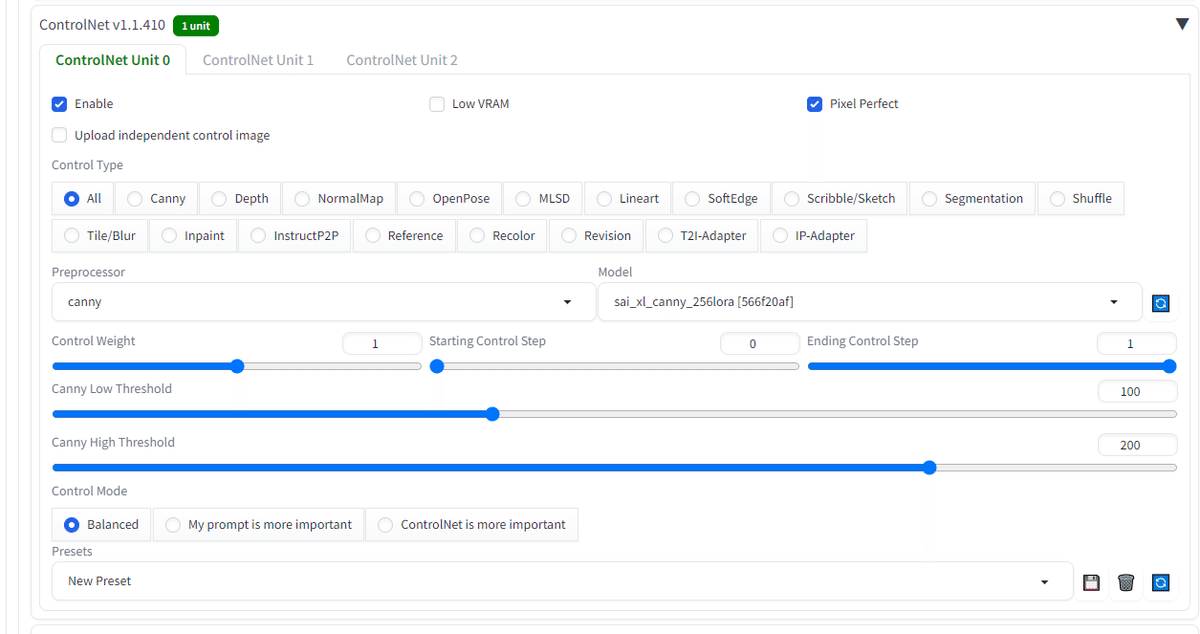

Set the ControlNet preprocessor to canny and the model to controllllite_v01032064e_sdxl_fake_scribble_anime [a9fe208a]. For ControlNet, sai_xl_canny_256lora [566f20af] looks like a good choice.

Set the image size to 1024*1024 and Denoising strength to 1.

This makes the line art crisp.

③ Extract the line art from the images of ② with MangaLineExtraction_PyTorch

Line.py

```python
import fnmatch
import os
import sys

import cv2
import numpy as np
import torch
import torch.nn as nn
from PIL import Image
from torch.utils.data.dataset import Dataset


class _bn_relu_conv(nn.Module):
    """BatchNorm -> LeakyReLU -> Conv2d."""

    def __init__(self, in_filters, nb_filters, fw, fh, subsample=1):
        super(_bn_relu_conv, self).__init__()
        self.model = nn.Sequential(
            nn.BatchNorm2d(in_filters, eps=1e-3),
            nn.LeakyReLU(0.2),
            nn.Conv2d(in_filters, nb_filters, (fw, fh), stride=subsample,
                      padding=(fw//2, fh//2), padding_mode='zeros')
        )

    def forward(self, x):
        return self.model(x)


class _u_bn_relu_conv(nn.Module):
    """BatchNorm -> LeakyReLU -> Conv2d -> 2x nearest-neighbour upsampling."""

    def __init__(self, in_filters, nb_filters, fw, fh, subsample=1):
        super(_u_bn_relu_conv, self).__init__()
        self.model = nn.Sequential(
            nn.BatchNorm2d(in_filters, eps=1e-3),
            nn.LeakyReLU(0.2),
            nn.Conv2d(in_filters, nb_filters, (fw, fh), stride=subsample,
                      padding=(fw//2, fh//2)),
            nn.Upsample(scale_factor=2, mode='nearest')
        )

    def forward(self, x):
        return self.model(x)


class _shortcut(nn.Module):
    """Residual addition; projects x with a 1x1 conv when shapes differ."""

    def __init__(self, in_filters, nb_filters, subsample=1):
        super(_shortcut, self).__init__()
        self.process = False
        self.model = None
        if in_filters != nb_filters or subsample != 1:
            self.process = True
            self.model = nn.Sequential(
                nn.Conv2d(in_filters, nb_filters, (1, 1), stride=subsample)
            )

    def forward(self, x, y):
        if self.process:
            y0 = self.model(x)
            return y0 + y
        else:
            return x + y


class _u_shortcut(nn.Module):
    """Residual addition for the upsampling path."""

    def __init__(self, in_filters, nb_filters, subsample):
        super(_u_shortcut, self).__init__()
        self.process = False
        self.model = None
        if in_filters != nb_filters:
            self.process = True
            self.model = nn.Sequential(
                nn.Conv2d(in_filters, nb_filters, (1, 1), stride=subsample,
                          padding_mode='zeros'),
                nn.Upsample(scale_factor=2, mode='nearest')
            )

    def forward(self, x, y):
        if self.process:
            return self.model(x) + y
        else:
            return x + y


class basic_block(nn.Module):
    def __init__(self, in_filters, nb_filters, init_subsample=1):
        super(basic_block, self).__init__()
        self.conv1 = _bn_relu_conv(in_filters, nb_filters, 3, 3, subsample=init_subsample)
        self.residual = _bn_relu_conv(nb_filters, nb_filters, 3, 3)
        self.shortcut = _shortcut(in_filters, nb_filters, subsample=init_subsample)

    def forward(self, x):
        x1 = self.conv1(x)
        x2 = self.residual(x1)
        return self.shortcut(x, x2)


class _u_basic_block(nn.Module):
    def __init__(self, in_filters, nb_filters, init_subsample=1):
        super(_u_basic_block, self).__init__()
        self.conv1 = _u_bn_relu_conv(in_filters, nb_filters, 3, 3, subsample=init_subsample)
        self.residual = _bn_relu_conv(nb_filters, nb_filters, 3, 3)
        self.shortcut = _u_shortcut(in_filters, nb_filters, subsample=init_subsample)

    def forward(self, x):
        y = self.residual(self.conv1(x))
        return self.shortcut(x, y)


class _residual_block(nn.Module):
    def __init__(self, in_filters, nb_filters, repetitions, is_first_layer=False):
        super(_residual_block, self).__init__()
        layers = []
        for i in range(repetitions):
            init_subsample = 1
            # The last repetition halves the resolution (except in the first layer)
            if i == repetitions - 1 and not is_first_layer:
                init_subsample = 2
            if i == 0:
                l = basic_block(in_filters=in_filters, nb_filters=nb_filters,
                                init_subsample=init_subsample)
            else:
                l = basic_block(in_filters=nb_filters, nb_filters=nb_filters,
                                init_subsample=init_subsample)
            layers.append(l)
        self.model = nn.Sequential(*layers)

    def forward(self, x):
        return self.model(x)


class _upsampling_residual_block(nn.Module):
    def __init__(self, in_filters, nb_filters, repetitions):
        super(_upsampling_residual_block, self).__init__()
        layers = []
        for i in range(repetitions):
            if i == 0:
                # The first repetition doubles the resolution
                l = _u_basic_block(in_filters=in_filters, nb_filters=nb_filters)
            else:
                l = basic_block(in_filters=nb_filters, nb_filters=nb_filters)
            layers.append(l)
        self.model = nn.Sequential(*layers)

    def forward(self, x):
        return self.model(x)


class res_skip(nn.Module):
    def __init__(self):
        super(res_skip, self).__init__()
        self.block0 = _residual_block(in_filters=1, nb_filters=24, repetitions=2, is_first_layer=True)
        self.block1 = _residual_block(in_filters=24, nb_filters=48, repetitions=3)
        self.block2 = _residual_block(in_filters=48, nb_filters=96, repetitions=5)
        self.block3 = _residual_block(in_filters=96, nb_filters=192, repetitions=7)
        self.block4 = _residual_block(in_filters=192, nb_filters=384, repetitions=12)

        self.block5 = _upsampling_residual_block(in_filters=384, nb_filters=192, repetitions=7)
        self.res1 = _shortcut(in_filters=192, nb_filters=192)  # block3 + block5

        self.block6 = _upsampling_residual_block(in_filters=192, nb_filters=96, repetitions=5)
        self.res2 = _shortcut(in_filters=96, nb_filters=96)  # block2 + block6

        self.block7 = _upsampling_residual_block(in_filters=96, nb_filters=48, repetitions=3)
        self.res3 = _shortcut(in_filters=48, nb_filters=48)  # block1 + block7

        self.block8 = _upsampling_residual_block(in_filters=48, nb_filters=24, repetitions=2)
        self.res4 = _shortcut(in_filters=24, nb_filters=24)  # block0 + block8

        self.block9 = _residual_block(in_filters=24, nb_filters=16, repetitions=2, is_first_layer=True)
        self.conv15 = _bn_relu_conv(in_filters=16, nb_filters=1, fh=1, fw=1, subsample=1)

    def forward(self, x):
        x0 = self.block0(x)
        x1 = self.block1(x0)
        x2 = self.block2(x1)
        x3 = self.block3(x2)
        x4 = self.block4(x3)

        x5 = self.block5(x4)
        res1 = self.res1(x3, x5)

        x6 = self.block6(res1)
        res2 = self.res2(x2, x6)

        x7 = self.block7(res2)
        res3 = self.res3(x1, x7)

        x8 = self.block8(res3)
        res4 = self.res4(x0, x8)

        x9 = self.block9(res4)
        y = self.conv15(x9)
        return y


class MyDataset(Dataset):
    def __init__(self, image_paths, transform=None):
        self.image_paths = image_paths
        self.transform = transform

    def get_class_label(self, image_name):
        # your method here
        head, tail = os.path.split(image_name)
        return tail

    def __getitem__(self, index):
        image_path = self.image_paths[index]
        x = Image.open(image_path)
        y = self.get_class_label(image_path.split('/')[-1])
        if self.transform is not None:
            x = self.transform(x)
        return x, y

    def __len__(self):
        return len(self.image_paths)


def loadImages(folder):
    """Collect every file path under folder, recursively."""
    matches = []
    for root, dirnames, filenames in os.walk(folder):
        for filename in fnmatch.filter(filenames, '*'):
            matches.append(os.path.join(root, filename))
    return matches


if __name__ == "__main__":
    model = res_skip()
    # map_location lets the weights load on a CPU-only machine as well
    model.load_state_dict(torch.load("manga_line/erika.pth", map_location="cpu"))

    is_cuda = torch.cuda.is_available()
    if is_cuda:
        model.cuda()
    else:
        model.cpu()
    model.eval()

    filelists = loadImages(sys.argv[1])
    batch_size = 5

    # Ensure the output directory exists
    output_dir = sys.argv[2]
    os.makedirs(output_dir, exist_ok=True)

    with torch.no_grad():
        for i, imname in enumerate(filelists):
            src = cv2.imread(imname, cv2.IMREAD_GRAYSCALE)
            if src is None:
                # loadImages matches every file, so skip anything OpenCV cannot read
                print(imname, "skipped (not a readable image)")
                continue

            ## To lighten processing, resize so that the short side becomes 1024
            #height, width = src.shape
            #aspect_ratio = width / height
            #if height < width:
            #    new_height = 1024
            #    new_width = int(new_height * aspect_ratio)
            #else:
            #    new_width = 1024
            #    new_height = int(new_width / aspect_ratio)
            #src = cv2.resize(src, (new_width, new_height))

            # Pad height and width up to the next multiple of 16
            rows = int(np.ceil(src.shape[0]/16))*16
            cols = int(np.ceil(src.shape[1]/16))*16

            # manually construct a batch. You can change it based on your usecases.
            patch = np.ones((1, 1, rows, cols), dtype="float32")
            patch[0, 0, 0:src.shape[0], 0:src.shape[1]] = src

            if is_cuda:
                tensor = torch.from_numpy(patch).cuda()
            else:
                tensor = torch.from_numpy(patch).cpu()

            y = model(tensor)
            print(imname, torch.max(y), torch.min(y))

            # Clamp to the 0-255 range
            yc = y.cpu().numpy()[0, 0, :, :]
            yc[yc > 255] = 255
            yc[yc < 0] = 0

            #img = cv2.resize(yc[0:src.shape[0], 0:src.shape[1]], (width,height))

            # Crop the padding off again and always save as PNG
            head, tail = os.path.split(imname)
            out_name = os.path.splitext(tail)[0] + ".png"
            cv2.imwrite(os.path.join(output_dir, out_name),
                        yc[0:src.shape[0], 0:src.shape[1]])

            if is_cuda and (i + 1) % batch_size == 0:
                torch.cuda.empty_cache()  # free memory every 5 images
```

python Line.py D:\desktop\line\lineart D:\desktop\line\lineart2
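Why the script pads to a multiple of 16: as far as I can read from the network definition, block1 to block4 each halve the resolution once and block5 to block8 each double it again, and the skip connections add feature maps from both paths, so the shapes only line up when height and width are divisible by 2^4 = 16. A minimal sketch of just that arithmetic (`padded_shape` is a hypothetical helper name, not part of Line.py):

```python
import math


def padded_shape(height, width, multiple=16):
    """Round each side up to the next multiple, like rows/cols in Line.py."""
    rows = math.ceil(height / multiple) * multiple
    cols = math.ceil(width / multiple) * multiple
    return rows, cols


for h, w in [(1024, 1024), (1000, 750), (17, 31)]:
    rows, cols = padded_shape(h, w)
    print(f"{h}x{w} -> padded to {rows}x{cols}, then cropped back to {h}x{w}")
```

A 1024*1024 image from the steps above needs no padding at all; the padding only matters for other sizes.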

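It would be fun to use the finished line art as a base, generate colored images with canny, and then train a "copy machine" LoRA or a controllllite model from them.

[Addendum] I actually tried it

Generating with sdxl_gen_img.py was also an option, but I wanted to try various ControlNets, so I decided to generate with the WebUI.

That makes the PNG info the problem.

Images generated by the WebUI embed text information such as the prompt, but sometimes I want to alter the prompt and so on slightly.

So I put together a script that rewrites the text information embedded in the generated images.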

```python
import os
import re
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def read_chunk(file):
    """Read one PNG chunk; returns four Nones at end of file."""
    chunk_size_data = file.read(4)
    if len(chunk_size_data) < 4:
        return None, None, None, None
    chunk_size = struct.unpack("!I", chunk_size_data)[0]
    chunk_type = file.read(4).decode("utf-8")
    chunk_data = file.read(chunk_size)
    chunk_crc = file.read(4)
    return chunk_size, chunk_type, chunk_data, chunk_crc


def read_text_chunk(data):
    """Return the text part of a tEXt chunk (keyword, NUL, text), or None."""
    parts = data.split(b'\x00', 1)
    if len(parts) == 2:
        # tEXt chunks are Latin-1 encoded
        return parts[1].decode("latin-1")
    return None


def process_file(file_path, output_dir):
    with open(file_path, "rb") as file:
        signature = file.read(8)
        if signature != PNG_SIGNATURE:
            print(f"{file_path} is not a valid PNG file.")
            return

        output_path = os.path.join(output_dir, os.path.basename(file_path))
        with open(output_path, "wb") as out_file:
            out_file.write(signature)

            while True:
                chunk_size, chunk_type, chunk_data, chunk_crc = read_chunk(file)
                if chunk_size is None:
                    break

                full_text = read_text_chunk(chunk_data) if chunk_type == "tEXt" else None
                if full_text is not None:
                    # Strip the unwanted strings
                    full_text = re.sub(r'<lora:test-flat:1> \(+((monochrome, white_tone ,grayscale, line art))\)+, ?', '', full_text)
                    full_text = re.sub(r'<lora:test-noline:-3>', '', full_text)
                    full_text = re.sub(r'white ', '', full_text)

                    # Build the modified tEXt chunk data
                    keyword = chunk_data.split(b'\x00', 1)[0]
                    chunk_data = keyword + b'\x00' + full_text.encode("latin-1")

                    # The CRC covers the chunk type and data, so recompute it
                    type_bytes = chunk_type.encode("utf-8")
                    chunk_crc = struct.pack("!I", zlib.crc32(type_bytes + chunk_data) & 0xFFFFFFFF)

                # Modified tEXt chunks and untouched chunks are written the same way
                out_file.write(struct.pack("!I", len(chunk_data)))
                out_file.write(chunk_type.encode("utf-8"))
                out_file.write(chunk_data)
                out_file.write(chunk_crc)


def main(input_dir, output_dir):
    os.makedirs(output_dir, exist_ok=True)
    for filename in os.listdir(input_dir):
        if filename.lower().endswith(".png"):
            full_path = os.path.join(input_dir, filename)
            process_file(full_path, output_dir)


if __name__ == "__main__":
    input_dir = "D:\\desktop\\line\\Base"
    output_dir = "D:\\desktop\\line\\txt"
    main(input_dir, output_dir)
```
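One detail worth spelling out: every PNG chunk ends with a CRC-32 computed over the chunk type and the chunk data, so a tEXt chunk whose text has changed needs a new CRC, otherwise strict decoders may reject the file. A minimal sketch of building a chunk with a valid CRC using only the standard library (`build_chunk` and `build_text_chunk` are hypothetical helper names):

```python
import struct
import zlib


def build_chunk(chunk_type, data):
    """Serialize one PNG chunk: length, type, data, CRC-32 of type + data."""
    crc = zlib.crc32(chunk_type + data) & 0xFFFFFFFF
    return struct.pack("!I", len(data)) + chunk_type + data + struct.pack("!I", crc)


def build_text_chunk(keyword, text):
    """A tEXt payload is the keyword, a NUL separator, then Latin-1 text."""
    payload = keyword.encode("latin-1") + b"\x00" + text.encode("latin-1")
    return build_chunk(b"tEXt", payload)


chunk = build_text_chunk("parameters", "anime screen cap of 1boy")
(length,) = struct.unpack("!I", chunk[:4])
(stored_crc,) = struct.unpack("!I", chunk[-4:])
print(length, hex(stored_crc))
```

Note that the length field counts only the data, while the CRC covers type plus data but not the length.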

Checking one of the newly created images shows:

parameters

anime screen cap of 1boy, running on indoor pool, looking at viewer, (((solo))), teen age, trending on pixiv, beautiful face from side

Negative prompt: negativeXL_D sdxl-negprompt8-v1m unaestheticXL_AYv1

Steps: 20, Sampler: Euler a, CFG scale: 7.0, Seed: 2034594944, Size: 1024x1024, Model hash: 9a0157cad2, Model: CounterfeitXL-V1.0, Denoising strength: 1, ControlNet 0: "Module: canny, Model: t2i-adapter_diffusers_xl_canny [6b0c1490], Weight: 1, Resize Mode: Crop and Resize, Low Vram: False, Processor Res: 512, Threshold A: 100, Threshold B: 200, Guidance Start: 0, Guidance End: 1, Pixel Perfect: True, Control Mode: Balanced", TI hashes: "negativeXL_D: fff5d51ab655, sdxl-negprompt8-v1m: 24350b43a034, unaestheticXL_AYv1: 8a94b6725117, negativeXL_D: fff5d51ab655, sdxl-negprompt8-v1m: 24350b43a034, unaestheticXL_AYv1: 8a94b6725117", Version: v1.6.0

so that worked.
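The same check can be done without opening the WebUI. Below is a minimal sketch that reads the tEXt chunks straight out of a PNG and verifies each chunk's CRC along the way. It assumes the text sits in a tEXt chunk under the `parameters` keyword, as in the output above; `read_text_chunks` is a hypothetical helper name and the file name in the usage comment is a placeholder.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def read_text_chunks(png_bytes):
    """Return {keyword: text} for every tEXt chunk, checking each CRC."""
    if png_bytes[:8] != PNG_SIGNATURE:
        raise ValueError("not a PNG file")
    texts = {}
    pos = 8
    while pos + 12 <= len(png_bytes):
        (length,) = struct.unpack("!I", png_bytes[pos:pos + 4])
        chunk_type = png_bytes[pos + 4:pos + 8]
        data = png_bytes[pos + 8:pos + 8 + length]
        (crc,) = struct.unpack("!I", png_bytes[pos + 8 + length:pos + 12 + length])
        if zlib.crc32(chunk_type + data) & 0xFFFFFFFF != crc:
            raise ValueError(f"bad CRC in {chunk_type!r} chunk")
        if chunk_type == b"tEXt":
            keyword, _, text = data.partition(b"\x00")
            texts[keyword.decode("latin-1")] = text.decode("latin-1")
        pos += 12 + length  # 4 length + 4 type + data + 4 CRC
    return texts


# Usage (placeholder file name):
# with open(r"D:\desktop\line\txt\example.png", "rb") as f:
#     print(read_text_chunks(f.read()).get("parameters"))
```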

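After that, set the following:

Input directory

D:\desktop\line\lineart (the line art extracted in ③)

Output directory

D:\desktop\line\output (output destination)

Controlnet input directory

D:\desktop\line\lineart (the line art extracted in ③)

PNG info

PNG info directory

D:\desktop\line\txt (the images whose text information was rewritten above)

Check all the checkboxes.

For ControlNet, sai_xl_canny_256lora [566f20af] looks like a good choice.

I really wanted full-color output, but the results came out grayscale, perhaps because they get pulled toward the images in the Input directory.

That is slightly disappointing, but let's just treat it as something that a grayscale-conversion preprocessor can take care of.

Incidentally, here is a note of the script I used to check whether the images were actually generated according to the line art.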

LineCheck.py

```python
from PIL import Image
import os

# Directory paths
LINEART_DIR = "D:\\desktop\\line\\lineart"
GRAYSCALE_DIR = "D:\\desktop\\line\\grayscale"
OUTPUT_DIR = "D:\\desktop\\line\\check"

IMAGE_EXTS = ('.png', '.jpg', '.jpeg')

# Create the output directory if it does not exist
os.makedirs(OUTPUT_DIR, exist_ok=True)

# Get the lists of image files
lineart_files = sorted(f for f in os.listdir(LINEART_DIR) if f.lower().endswith(IMAGE_EXTS))
grayscale_files = sorted(f for f in os.listdir(GRAYSCALE_DIR) if f.lower().endswith(IMAGE_EXTS))

# Make sure both directories hold the same number of images
if len(lineart_files) != len(grayscale_files):
    raise ValueError("The number of images in both directories are not the same.")

for lineart_file, grayscale_file in zip(lineart_files, grayscale_files):
    # Open the images
    lineart = Image.open(os.path.join(LINEART_DIR, lineart_file)).convert("RGBA")
    # Convert to RGB so the pink overlay keeps its color even on a single-channel image
    grayscale = Image.open(os.path.join(GRAYSCALE_DIR, grayscale_file)).convert("RGB")

    datas = lineart.getdata()
    new_data = []
    for item in datas:
        r, g, b, a = item
        # Make the white background transparent.
        # The threshold is loosened a little so near-white also becomes transparent.
        if r > 240 and g > 240 and b > 240 and a > 240:
            new_data.append((255, 255, 255, 0))
        else:
            new_data.append((255, 0, 255, a))  # recolor the line to pink
    lineart.putdata(new_data)

    # Overlay the line art on the generated image
    grayscale.paste(lineart, (0, 0), lineart)

    # Save
    output_path = os.path.join(OUTPUT_DIR, os.path.splitext(lineart_file)[0] + ".png")
    grayscale.save(output_path)

print("Process completed!")
```
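The script pairs files by sorted order, which silently mispairs everything after a missing image if the counts happen to match for another reason. A possible alternative is to pair by file name without the extension. This is only a sketch under the assumption that the batch output keeps the base name of its input file, which I have not verified for every WebUI version; `pair_by_stem` is a hypothetical helper name.

```python
import os


def pair_by_stem(lineart_names, generated_names):
    """Pair files whose names match once the extension is dropped."""
    generated = {os.path.splitext(name)[0]: name for name in generated_names}
    pairs, missing = [], []
    for name in sorted(lineart_names):
        stem = os.path.splitext(name)[0]
        if stem in generated:
            pairs.append((name, generated[stem]))
        else:
            missing.append(name)  # line art with no generated counterpart
    return pairs, missing


pairs, missing = pair_by_stem(["a.png", "b.png", "c.png"], ["a.png", "c.jpg"])
print(pairs)
print(missing)
```

In LineCheck.py the two arguments would be the `os.listdir` results for the line art and generated-image directories, and the loop would iterate over `pairs` instead of `zip(...)`.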