A letter from Japanese animators to CG/AI researchers around the world. / Problems with using AI in Japanese anime. SIGGRAPH 2024

This document presents the challenges of using generative AI in Japanese anime production, as identified in a survey conducted by the Digital Content Association of Japan (DCAJ). By sharing the concerns felt on the production floor with CG and AI researchers, we hope to encourage further development and application of these technologies.

3DCG

We conducted research and testing to use AI to solve production delays caused by labor shortages at Japanese anime production sites. The results, as of July 2024, are summarized below.

Challenges of using AI in anime production

We need AI that can maintain consistency within the same space and understand the concept of camera angles.
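"Consistency within the same space" has a precise geometric meaning: once a scene is fixed, every view of it is determined by the camera pose, so a point seen from two positions must land at predictable image coordinates. The sketch below is a minimal pinhole projection illustrating that constraint; the `Camera` class, its fields, and the 500-pixel focal length are illustrative assumptions, not part of any tool mentioned in this document.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple

Point3 = Tuple[float, float, float]


@dataclass(frozen=True)
class Camera:
    """Hypothetical pinhole camera at height y=0.

    x, z: camera position on the ground plane.
    yaw: rotation in radians about the vertical y axis
         (yaw=0 looks down +z, yaw=pi/2 looks down +x).
    focal: focal length in pixels.
    """
    x: float
    z: float
    yaw: float = 0.0
    focal: float = 500.0


def project(cam: Camera, point: Point3) -> Optional[Tuple[float, float]]:
    """Project a world-space point to (u, v) measured from the image center."""
    px, py, pz = point
    dx, dz = px - cam.x, pz - cam.z
    # Undo the camera's yaw so the offset is expressed in camera space.
    c, s = math.cos(cam.yaw), math.sin(cam.yaw)
    cam_x = c * dx - s * dz
    cam_z = s * dx + c * dz
    if cam_z <= 0:
        return None  # the point is behind the camera
    return (cam.focal * cam_x / cam_z, cam.focal * py / cam_z)


# The same corner of a prop, seen from two camera positions 2 units apart.
corner = (1.0, 0.0, 5.0)
left = project(Camera(x=0.0, z=0.0), corner)    # (100.0, 0.0)
right = project(Camera(x=2.0, z=0.0), corner)   # (-100.0, 0.0)
# Horizontal shift = focal * baseline / depth = 500 * 2 / 5 = 200 pixels.
print(left[0] - right[0])                       # 200.0
```

An image generator that "understands camera angles" would have to respect this kind of relationship implicitly; generating each view independently gives no such guarantee.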

Currently, it is possible to depict mecha and backgrounds as single images, but what is needed is an AI that can output designs whose shapes stay consistent across multiple views, as in a three-view drawing. The more information an AI has to interpret from an input image, the less consistent its output tends to be across different angles.

We want to separate background materials into layers according to depth within the 2D picture, for character placement and for adding lens bokeh during compositing. Currently, it is possible to generate a single picture, but it would be better to separate the materials into layers and draw the hidden area behind each layer in detail. At present, Photoshop's functions seem to be the best option, but even so, the layer boundaries are unclear, so the results cannot be called practical.

The animation process requires AI that can faithfully reproduce the design decided in the design process. Technologies that generate movement from a single still image, such as AnimateAnyone and MimicMotion, produce something similar to the design but not identical to it, and do not give appropriate results for anime designs.
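For the depth-based layer separation requested above (character placement and lens bokeh in compositing), a minimal sketch looks like this. It assumes a per-pixel depth map is already available from some depth estimator (not specified here) and splits pixels into layers by hard thresholds; the blur size per layer uses the standard thin-lens circle-of-confusion formula. Function names and parameters are illustrative.

```python
from bisect import bisect_right
from typing import List


def depth_layers(depth: List[List[float]], boundaries: List[float]) -> List[List[int]]:
    """Assign each pixel a layer index from its depth (0 = nearest layer).

    `boundaries` must be sorted ascending. With boundaries [5, 20]:
    d < 5 -> layer 0, 5 <= d < 20 -> layer 1, d >= 20 -> layer 2.
    """
    return [[bisect_right(boundaries, d) for d in row] for row in depth]


def circle_of_confusion_mm(focal_mm: float, f_number: float,
                           focus_mm: float, subject_mm: float) -> float:
    """Thin-lens blur-circle diameter on the sensor (mm) for a subject at
    `subject_mm` when the lens is focused at `focus_mm` (all distances in mm)."""
    aperture_mm = focal_mm / f_number
    return (aperture_mm * abs(subject_mm - focus_mm) / subject_mm
            * focal_mm / (focus_mm - focal_mm))


# Toy 2x2 depth map split into near / middle / far layers.
layers = depth_layers([[1.0, 6.0], [25.0, 5.0]], [5.0, 20.0])
print(layers)  # [[0, 1], [2, 1]]

# A 50 mm lens at f/2 focused at 2 m: a layer sitting at 4 m gets this blur diameter.
print(circle_of_confusion_mm(50.0, 2.0, 2000.0, 4000.0))  # about 0.3205 mm
```

A hard per-pixel threshold like this is exactly where unclear boundaries appear (hair, foliage, soft edges), and it does nothing to fill in the hidden area behind each layer, which the text also asks for.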

The image below shows a standard workflow for anime production, along with steps that are prone to delays due to labor shortages, and areas where we hope to use AI to improve efficiency in the future.

■ Character Design

Investigating:

💡 If we could create a clean rough draft and generate a stock image with anti-aliasing disabled, it would be easier to select regions by color for painting, and the efficiency of color design would improve. However, because generated images currently always contain anti-aliasing, selection is difficult. To solve this problem, we are considering creating a ControlNet to control anti-aliasing.
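Why anti-aliasing gets in the way of color selection: edge pixels are blends of the line color and the fill color, so an exact-color selection stops short of the line and leaves a fringe unpainted. The sketch below is a generic "magic wand" style flood fill written for illustration; it is not Photoshop's implementation or the tool used in the survey, and the toy images are made up.

```python
from collections import deque
from typing import List, Set, Tuple

RGB = Tuple[int, int, int]


def magic_wand(img: List[List[RGB]], seed: Tuple[int, int],
               tolerance: int = 0) -> Set[Tuple[int, int]]:
    """Select the 4-connected region around `seed` (row, col) whose pixels are
    within `tolerance` of the seed color on every RGB channel."""
    rows, cols = len(img), len(img[0])
    target = img[seed[0]][seed[1]]
    selected = {seed}
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and (nr, nc) not in selected:
                if max(abs(a - b) for a, b in zip(img[nr][nc], target)) <= tolerance:
                    selected.add((nr, nc))
                    queue.append((nr, nc))
    return selected


WHITE, GRAY, BLACK = (255, 255, 255), (170, 170, 170), (0, 0, 0)
# A vertical line without anti-aliasing, and one with blended (gray) edge pixels.
aliased = [[WHITE, WHITE, BLACK, WHITE, WHITE] for _ in range(3)]
antialiased = [[WHITE, GRAY, BLACK, GRAY, WHITE] for _ in range(3)]

print(len(magic_wand(aliased, (0, 0))))      # 6: everything left of the line
print(len(magic_wand(antialiased, (0, 0))))  # 3: the gray fringe is left unselected
```

Raising `tolerance` picks up the fringe in this toy case, but in real drawings the blend values vary from pixel to pixel, which is why generating hard-edged images in the first place, as the text proposes, is attractive.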

■ Mechanical Design

AI-based Workflow

💡 Currently, it is difficult to output images with consistent shapes across three-view drawings.
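One concrete way to state "consistent shapes" across a set of orthographic views: views that share an axis must agree on it. The sketch below assumes engineering-style front (x, y), side (z, y), and top (x, z) views and reduces each view to bounding extents; that representation is a hypothetical simplification, since real mecha silhouettes need far more than bounding boxes.

```python
from typing import Dict, Iterable, List, Tuple

Point3 = Tuple[float, float, float]
Extent = Tuple[float, float]  # (min, max) along one axis
Views = Dict[str, Dict[str, Extent]]


def _extent(values: Iterable[float]) -> Extent:
    vals = list(values)
    return (min(vals), max(vals))


def three_views(points: List[Point3]) -> Views:
    """Orthographic front (x, y), side (z, y) and top (x, z) extents of a 3D model."""
    xs = _extent(p[0] for p in points)
    ys = _extent(p[1] for p in points)
    zs = _extent(p[2] for p in points)
    return {
        "front": {"x": xs, "y": ys},
        "side": {"z": zs, "y": ys},
        "top": {"x": xs, "z": zs},
    }


def mismatched_axes(views: Views, tol: float = 1e-6) -> List[str]:
    """Return the shared axes on which two views disagree (e.g. front vs side height)."""
    shared = [("y", "front", "side"), ("x", "front", "top"), ("z", "side", "top")]
    bad = []
    for axis, a, b in shared:
        (a_min, a_max), (b_min, b_max) = views[a][axis], views[b][axis]
        if abs(a_min - b_min) > tol or abs(a_max - b_max) > tol:
            bad.append(axis)
    return bad


box = [(x, y, z) for x in (0.0, 4.0) for y in (0.0, 2.0) for z in (0.0, 1.0)]
views = three_views(box)
print(mismatched_axes(views))  # []: derived from one model, consistent by construction

views["side"]["y"] = (0.0, 2.5)  # e.g. a separately generated side view that came out taller
print(mismatched_axes(views))  # ['y']
```

Views projected from a single 3D model pass this check automatically; views generated one at a time from 2D prompts do not, which is the gap described above.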

■ Background Design

Normal Workflow

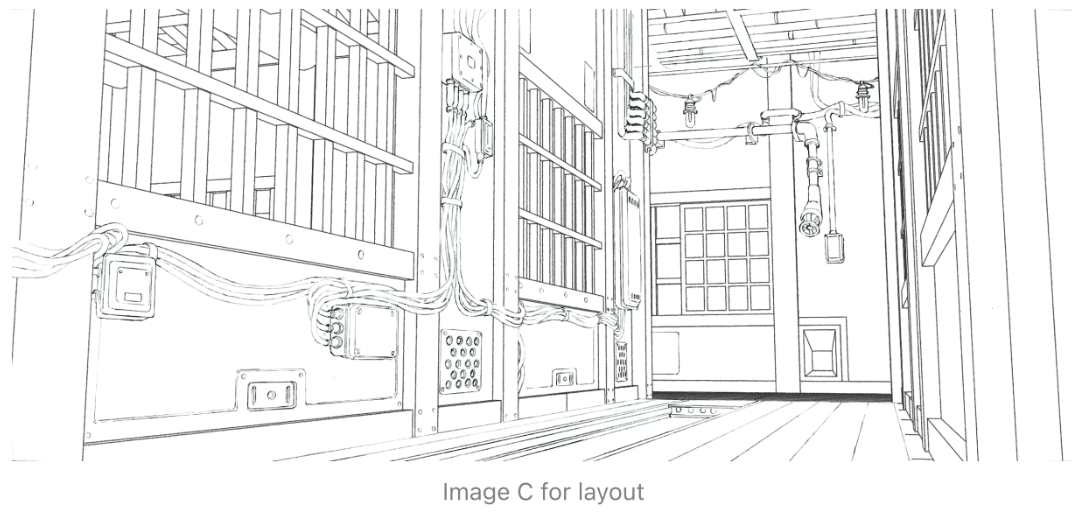

layout

Background drawn with pencil

Marking indicating the light source

Finish

AI-powered workflow

💡 Currently, it is possible to create a single image, but if you try to generate images from different camera angles within the same space, it is not possible to achieve a design that maintains spatial consistency.

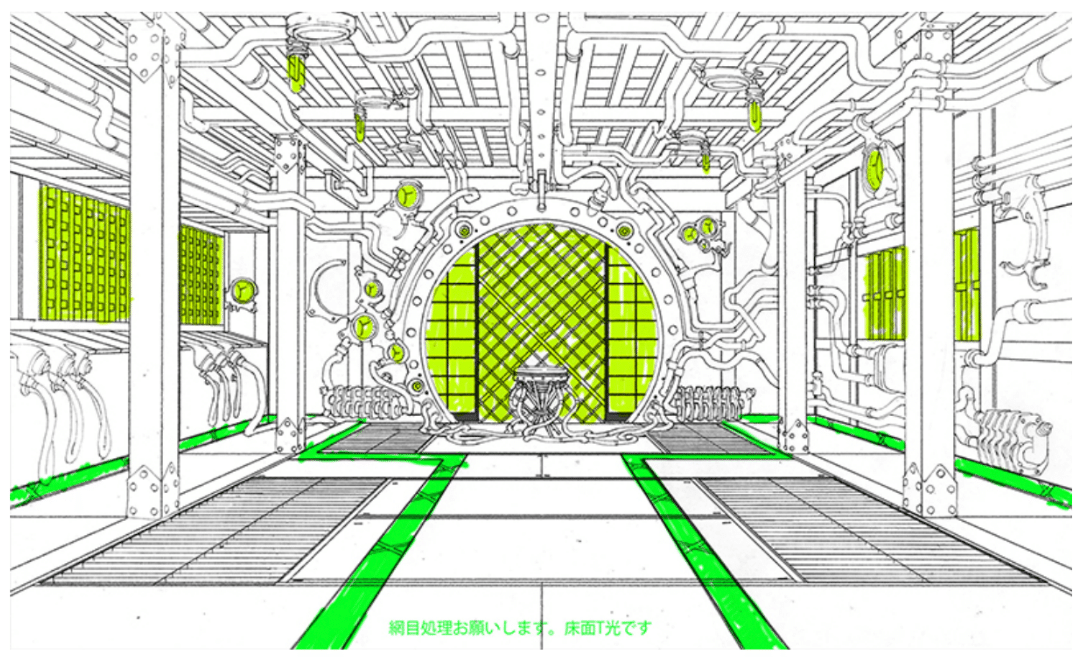

When trying to use AI to polish an input image created from a rough 3D model, differences arise, such as the areas marked in green in the image below.

The following style was used to output images from different angles of the same space.

💡 The input images show different angles of the same space, but the output from the AI shows inconsistencies in the shapes and colors of parts that should be identical.
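Differences like these are currently marked by eye. As a sketch of how they could be flagged automatically, assume (hypothetically) that both the render from the 3D model and the AI output at the same camera angle have been reduced to per-pixel part labels, for example an ID render for the former and a segmentation pass for the latter; pixels whose labels disagree are then the inconsistent parts. Comparing raw pixels instead would flag all of the intended polish as well.

```python
from typing import List, Tuple

Labels = List[List[int]]
Mask = List[List[bool]]
RGB = Tuple[int, int, int]


def mismatch_mask(expected: Labels, actual: Labels) -> Mask:
    """True wherever the part label differs between two same-sized label maps."""
    if len(expected) != len(actual) or any(len(e) != len(a) for e, a in zip(expected, actual)):
        raise ValueError("label maps must have the same shape")
    return [[e != a for e, a in zip(row_e, row_a)] for row_e, row_a in zip(expected, actual)]


def mismatch_ratio(mask: Mask) -> float:
    """Fraction of pixels flagged as inconsistent."""
    total = sum(len(row) for row in mask)
    return sum(sum(row) for row in mask) / total


def highlight(rgb: List[List[RGB]], mask: Mask, color: RGB = (0, 255, 0)) -> List[List[RGB]]:
    """Paint flagged pixels green, mirroring the manual green markings."""
    return [[color if m else px for px, m in zip(row_px, row_m)]
            for row_px, row_m in zip(rgb, mask)]


expected = [[1, 1, 2], [1, 2, 2]]  # labels rendered from the 3D model
actual = [[1, 1, 2], [1, 1, 2]]    # labels recovered from the AI output
mask = mismatch_mask(expected, actual)
print(mask)                   # [[False, False, False], [False, True, False]]
print(mismatch_ratio(mask))   # 1 of 6 pixels differs
```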

AI-based background creation

We tried creating a background with AI in the style of this image.

At the low-poly model stage, we left the polishing to the AI.

For fine details, hand-drawn details were input into the AI.

💡 The aim is to reduce the cost of creating backgrounds by using AI, but at present, better results are expected from creating low-polygon models and adding details by hand.

As for coloring, AI is not suitable for scenes where the camera angle changes within the same space, because spatial consistency cannot be maintained when the camera angle changes.

■ Character Animation

💡 We are currently experimenting with various methods of generating animation from a specified character design, but have not yet found a satisfactory one. Ideally, we would like to input images taken from multiple angles, such as front, side, and back views and close-ups of the face, based on the three-view drawing created during the character design stage, and have the AI comprehensively understand the character's features.

💡 Currently, LoRA is considered an effective method for fixing a character's appearance to a specified design. However, it has problems such as a lack of continuity of movement, so we are continuing to investigate other methods.
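For readers less familiar with LoRA (Low-Rank Adaptation): it keeps a pretrained weight matrix W frozen and learns only a low-rank update, giving W + (alpha / r) · B · A, where B is d × r, A is r × k, and r is small. A character LoRA learns such updates on an image model's layers (usually the attention projections) from pictures of the character. The sketch below shows only that arithmetic on tiny pure-Python matrices, which stand in for real layers; it says nothing about motion continuity, which LoRA by itself does not address.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product of an m x n and an n x p matrix."""
    inner = len(b)
    return [[sum(a[i][t] * b[t][j] for t in range(inner)) for j in range(len(b[0]))]
            for i in range(len(a))]


def lora_merge(w: Matrix, b: Matrix, a: Matrix, alpha: float) -> Matrix:
    """Return W + (alpha / r) * B @ A, with W d x k, B d x r, and A r x k."""
    r = len(a)  # rank = number of rows of A
    delta = matmul(b, a)
    scale = alpha / r
    return [[w[i][j] + scale * delta[i][j] for j in range(len(w[0]))]
            for i in range(len(w))]


def trainable_params(d: int, k: int, r: int) -> Tuple[int, int]:
    """(full fine-tuning, LoRA) trainable parameter counts for one d x k weight."""
    return d * k, r * (d + k)


w = [[1.0, 0.0], [0.0, 1.0]]  # frozen pretrained weight
b = [[1.0], [2.0]]            # d x r, with r = 1
a = [[3.0, 4.0]]              # r x k
print(lora_merge(w, b, a, alpha=1.0))  # [[4.0, 4.0], [6.0, 9.0]]
print(trainable_params(768, 768, 8))   # (589824, 12288)
```

The small parameter count is what makes per-character LoRAs cheap to train and swap; since each generated frame is still produced by the image model, keeping movement continuous across frames has to come from elsewhere.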