Azure Form Recognizerでドキュメントファイルからテキストを抽出する

こんにちわ、@_mkazutaka です。社内データを取り込んだChatGPTなどを構築する場合、一般的にはデータを保存するプログラムとデータを読み取る処理の2つが必要です。

データを保存するためには、まずドキュメントファイルからテキストを抽出する必要があります。LangChainにはドキュメントファイルからテキストを抽出するために以下のツールを使った方法が説明されています(リンク)。

PyPDF

MathPix

Unstructured

etc..

同様にAzureにもドキュメントファイルからテキストを抽出するサービス「Azure Form Recognizer」が提供されています。今回は、こちらのサービスを使って、ドキュメントファイルからテキストを抽出してみた結果を紹介します。

ドキュメントを抽出する

参考データは、LangChain内でも使われている`layout-parser-paper.pdf`を使います。せっかくなので、pypdfを使ったデータと比較してみます。

pypdfを使ったコード

pypdfを使って、PDFファイルを読み取るコードがこちらです。

from pypdf import PdfReader

filename = "./data/layout-parser-paper.pdf"

page_map = []

reader = PdfReader(filename)

pages = reader.pages

for page_num, p in enumerate(pages):

page_text = p.extract_text()

page_map.append((page_num, offset, page_text))

このコードは、Pythonのpypdfライブラリを使用してPDFファイルを読み込み、各ページのテキストを抽出し、その情報をpage_mapリストに追加します。

具体的には、まずPdfReaderクラスを使って指定されたファイル(layout-parser-paper.pdf)を開きます。次に、reader.pagesを使ってPDFのすべてのページにアクセスします。

forループを使って各ページに順番にアクセスし、enumerate()関数を使ってページの番号を取得します(page_num)。p.extract_text()を使ってそのページのテキストを抽出し、page_textという変数に格納します。

最後に、(page_num, offset, page_text)のタプルをpage_mapリストに追加します。このタプルは、ページ番号、オフセット(コード内には未定義)、および抽出されたテキストを含んでいます。

つまり、このコードは指定されたPDFファイルからテキストを抽出し、各ページの情報をpage_mapに保存するものです。

Azure Form Recognizerを使う

AzureFormRecognizerを使ったコードがこちらです。読み取った表をHTMLの表として変換するコードが入っているため、少し複雑になっています。(参考コードはこちら)

from azure.ai.formrecognizer import DocumentAnalysisClient

from azure.core.credentials import AzureKeyCredential

import html

filename = "./data/layout-parser-paper.pdf"

FORM_RECOGNIZER_SERVICE = "INPUT YOUR FORM RECOGNIZER SERVICE"

AZURE_FORM_RECOGNIZER_KEY = "INPUT YOUR FORM RECOGNIZER KEY"

AZURE_CREDENTIAL = AzureKeyCredential(AZURE_FORM_RECOGNIZER_KEY)

def table_to_html(table):

table_html = "<table>"

rows = [sorted([cell for cell in table.cells if cell.row_index == i], key=lambda cell: cell.column_index) for i in

range(table.row_count)]

for row_cells in rows:

table_html += "<tr>"

for cell in row_cells:

tag = "th" if (cell.kind == "columnHeader" or cell.kind == "rowHeader") else "td"

cell_spans = ""

if cell.column_span > 1: cell_spans += f" colSpan={cell.column_span}"

if cell.row_span > 1: cell_spans += f" rowSpan={cell.row_span}"

table_html += f"<{tag}{cell_spans}>{html.escape(cell.content)}</{tag}>"

table_html += "</tr>"

table_html += "</table>"

return table_html

page_map = []

form_recognizer_client = DocumentAnalysisClient(

endpoint=f"https://{FORM_RECOGNIZER_SERVICE}.cognitiveservices.azure.com/",

credential=AZURE_CREDENTIAL,

)

with open(filename, "rb") as f:

poller = form_recognizer_client.begin_analyze_document("prebuilt-layout", document=f)

form_recognizer_results = poller.result()

for page_num, page in enumerate(form_recognizer_results.pages):

tables_on_page = [table for table in form_recognizer_results.tables if

table.bounding_regions[0].page_number == page_num + 1]

# mark all positions of the table spans in the page

page_offset = page.spans[0].offset

page_length = page.spans[0].length

table_chars = [-1] * page_length

for table_id, table in enumerate(tables_on_page):

for span in table.spans:

# replace all table spans with "table_id" in table_chars array

for i in range(span.length):

idx = span.offset - page_offset + i

if idx >= 0 and idx < page_length:

table_chars[idx] = table_id

# build page text by replacing charcters in table spans with table html

page_text = ""

added_tables = set()

for idx, table_id in enumerate(table_chars):

if table_id == -1:

page_text += form_recognizer_results.content[page_offset + idx]

elif not table_id in added_tables:

page_text += table_to_html(tables_on_page[table_id])

added_tables.add(table_id)

page_text += " "

page_map.append((page_num, page_text))このコードは、AzureのForm Recognizerサービスを使用して、指定されたPDFファイルからテーブルデータを抽出し、HTML形式の表に変換してpage_mapリストに保存します。

具体的には、まず必要なライブラリをインポートします。次に、AzureのForm Recognizerサービスに接続するためのエンドポイントや認証情報を設定します。

table_to_html()関数は、テーブルデータを受け取り、HTML形式の表に変換します。

page_mapリストは、各ページの情報を保存するための空のリストです。

DocumentAnalysisClientを使用してForm Recognizerクライアントを作成し、指定されたPDFファイルをバイナリ形式で開きます。

begin_analyze_document()メソッドを使用してドキュメントの解析を開始し、結果を取得します。

form_recognizer_results.pagesを使用して各ページに順番にアクセスし、テーブルが存在する場合にはそれを取得します。

テーブルの位置を特定し、テーブルのスパン(範囲)内の文字をテーブルIDで置き換えます。

テーブルのスパン内の文字をテーブルのHTML形式に変換して、ページのテキストに組み込みます。

最後に、(page_num, page_text)のタプルをpage_mapリストに追加します。

つまり、このコードは指定されたPDFファイルからテーブルデータを抽出し、各ページのテキストと変換されたHTML表をpage_mapに保存するものです。

結果を比較する(パート1)

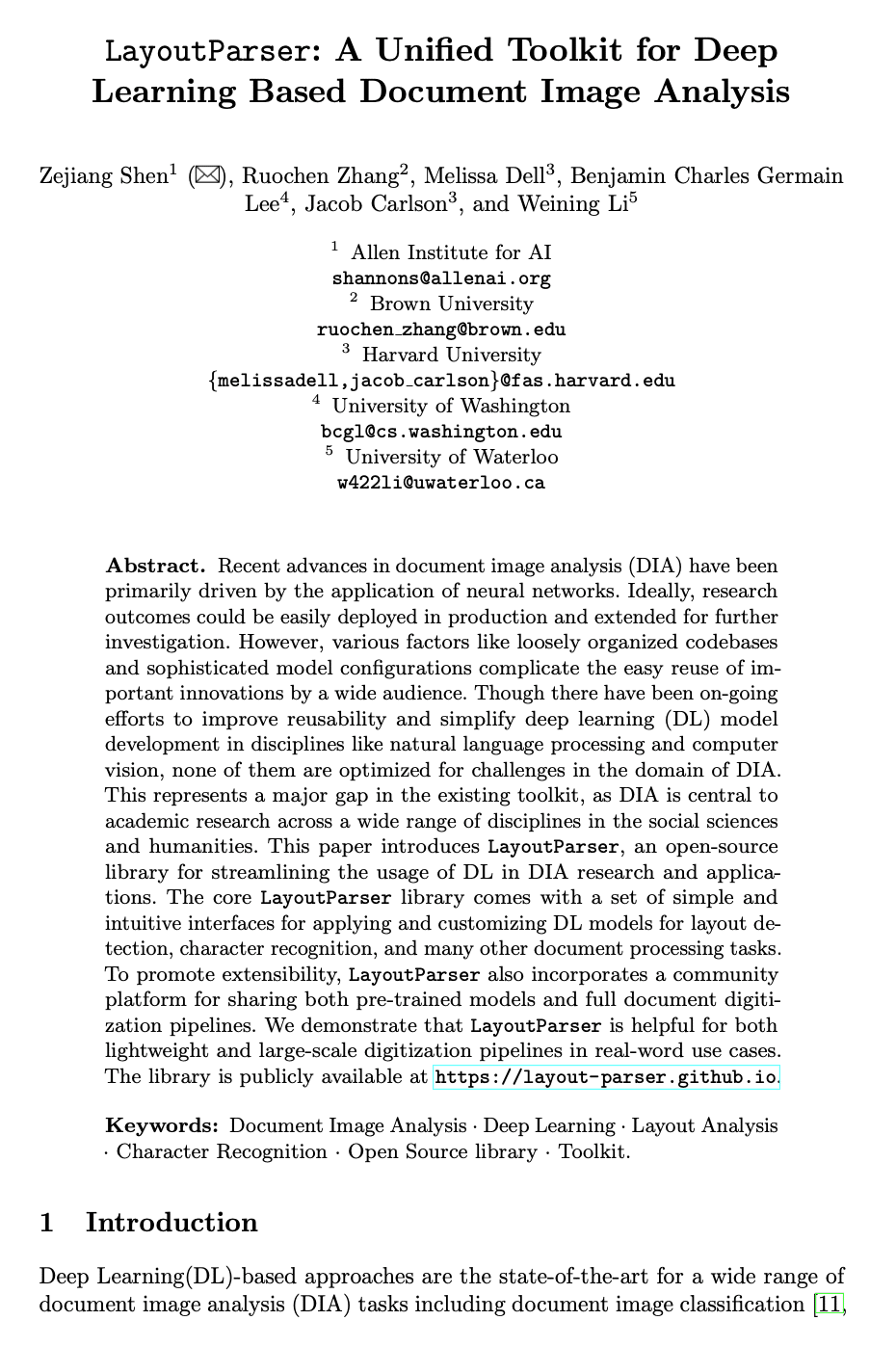

出来上がった`page_map[0]`の結果の比較です。上がpypdf、下がAzureFormRecognizerになっています。

pypdfだと、「Unified」の文字が 「Uni\x0ced」になっています。その他は特に気になる違いはなさそうですね。

(0, 'LayoutParser : A Uni\x0ced Toolkit for Deep\nLearning Based Document Image Analysis\nZejiang Shen1( \x00), Ruochen Zhang2, Melissa Dell3, Benjamin Charles Germain\nLee4, Jacob Carlson3, and Weining Li5\n1Allen Institute for AI\nshannons@allenai.org\n2Brown University\nruochen zhang@brown.edu\n3Harvard University\nfmelissadell,jacob carlson g@fas.harvard.edu\n4University of Washington\nbcgl@cs.washington.edu\n5University of Waterloo\nw422li@uwaterloo.ca\nAbstract. Recent advances in document image analysis (DIA) have been\nprimarily driven by the application of neural networks. Ideally, research\noutcomes could be easily deployed in production and extended for further\ninvestigation. However, various factors like loosely organized codebases\nand sophisticated model con\x0cgurations complicate the easy reuse of im-\nportant innovations by a wide audience. Though there have been on-going\ne\x0borts to improve reusability and simplify deep learning (DL) model\ndevelopment in disciplines like natural language processing and computer\nvision, none of them are optimized for challenges in the domain of DIA.\nThis represents a major gap in the existing toolkit, as DIA is central to\nacademic research across a wide range of disciplines in the social sciences\nand humanities. This paper introduces LayoutParser , an open-source\nlibrary for streamlining the usage of DL in DIA research and applica-\ntions. The core LayoutParser library comes with a set of simple and\nintuitive interfaces for applying and customizing DL models for layout de-\ntection, character recognition, and many other document processing tasks.\nTo promote extensibility, LayoutParser also incorporates a community\nplatform for sharing both pre-trained models and full document digiti-\nzation pipelines. We demonstrate that LayoutParser is helpful for both\nlightweight and large-scale digitization pipelines in real-word use cases.\nThe library is publicly available at https://layout-parser.github.io .\nKeywords: Document Image Analysis ·Deep Learning ·Layout Analysis\n·Character Recognition ·Open Source library ·Toolkit.\n1 Introduction\nDeep Learning(DL)-based approaches are the state-of-the-art for a wide range of\ndocument image analysis (DIA) tasks including document image classi\x0ccation [ 11,arXiv:2103.15348v2 [cs.CV] 21 Jun 2021')(0, 0, 'arXiv:2103.15348v2 [cs.CV] 21 Jun 2021\nLayoutParser: A Unified Toolkit for Deep Learning Based Document Image Analysis\nZejiang Shen 1\n( ), Ruochen Zhang 2 ,\nMelissa Dell\n3 , Benjamin Charles Germain Lee\n4 Jacob Carlson\n3 , and Weining Li 1 Allen Institute for AI shannons@allenai.org 2\n5\n,\nBrown University ruochen zhang@brown.edu 3 {melissadell,jacob carlson 4\nHarvard University }@fas.harvard.edu University of Washington bcgl@cs.washington.edu 5 University of Waterloo w422li@uwaterloo.ca\nAbstract. Recent advances in document image analysis (DIA) have been primarily driven by the application of neural networks. Ideally, research outcomes could be easily deployed in production and extended for further investigation. However, various factors like loosely organized codebases and sophisticated model configurations complicate the easy reuse of im- portant innovations by a wide audience. Though there have been on-going efforts to improve reusability and simplify deep learning (DL) model development in disciplines like natural language processing and computer vision, none of them are optimized for challenges in the domain of DIA. This represents a major gap in the existing toolkit, as DIA is central to academic research across a wide range of disciplines in the social sciences and humanities. This paper introduces LayoutParser, an open-source library for streamlining the usage of DL in DIA research and applica- tions. The core LayoutParser library comes with a set of simple and intuitive interfaces for applying and customizing DL models for layout de- tection, character recognition, and many other document processing tasks. To promote extensibility, LayoutParser also incorporates a community platform for sharing both pre-trained models and full document digiti- zation pipelines. We demonstrate that LayoutParser is helpful for both lightweight and large-scale digitization pipelines in real-word use cases. The library is publicly available at https://layout-parser.github.io\n.\nKeywords: Document Image Analysis\n· Deep Learning · Layout Analysis Character Recognition ·\n· Open Source library · Toolkit.\n1 Introduction\nDeep Learning(DL)-based approaches are the state-of-the-art for a wide range of document image analysis (DIA) tasks including document image classification [11 , :selected: :selected: :selected: :selected: :unselected: :selected: ')

結果を比較する(パート2)

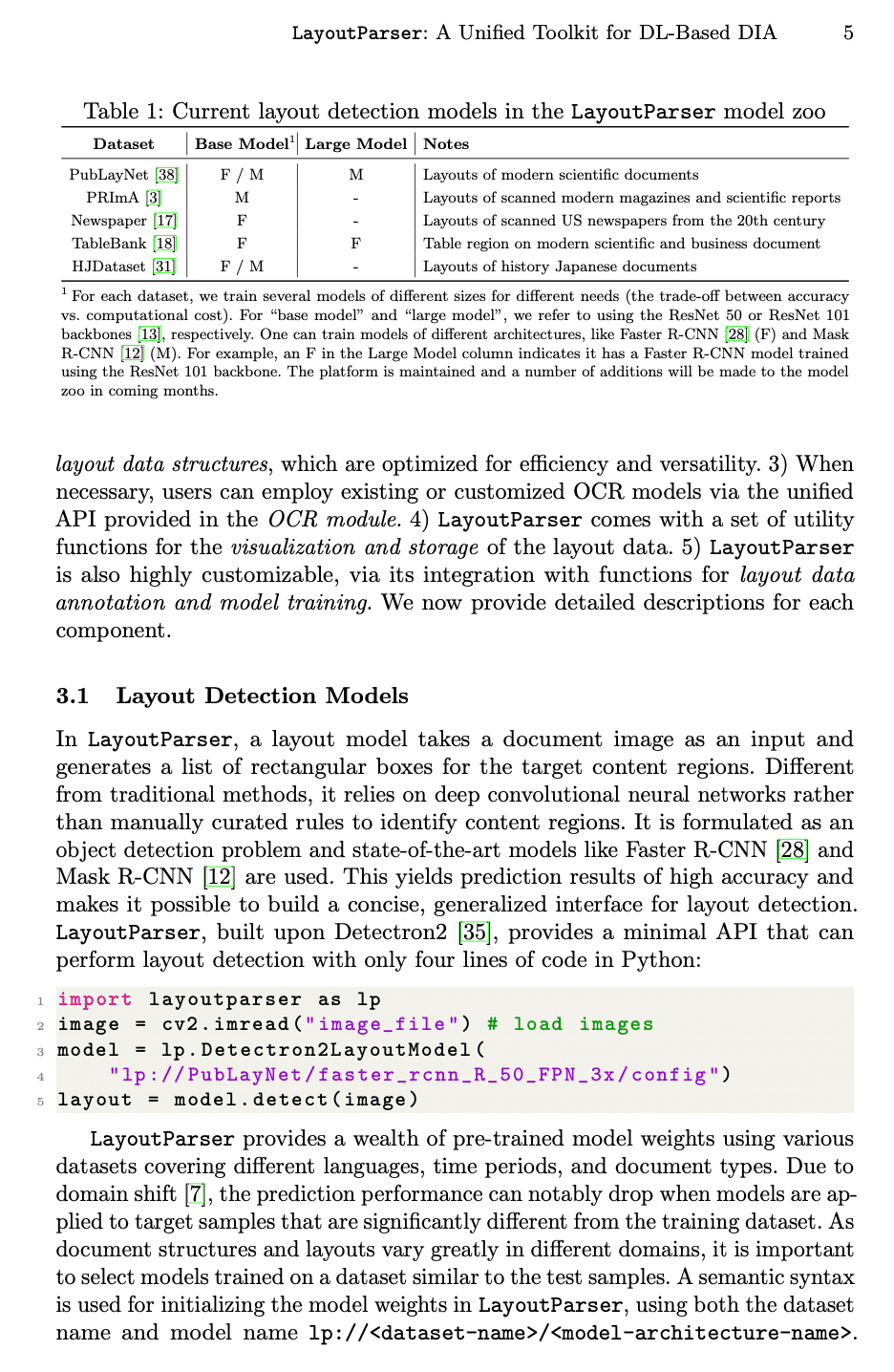

出来上がった`page_map[4]`の結果の比較です。上がpypdf、下がAzureFormRecognizerになっています。

大きな違いとしては、PDF上でTableとなっている部分がAzureFormRecognizerだときちんとHTMLのテーブル形式になっている点ですね。テーブルの読み込みができるようになっています。

(4, 'LayoutParser : A Uni\x0ced Toolkit for DL-Based DIA 5\nTable 1: Current layout detection models in the LayoutParser model zoo\nDataset Base Model1Large Model Notes\nPubLayNet [38] F / M M Layouts of modern scienti\x0cc documents\nPRImA [3] M - Layouts of scanned modern magazines and scienti\x0cc reports\nNewspaper [17] F - Layouts of scanned US newspapers from the 20th century\nTableBank [18] F F Table region on modern scienti\x0cc and business document\nHJDataset [31] F / M - Layouts of history Japanese documents\n1For each dataset, we train several models of di\x0berent sizes for di\x0berent needs (the trade-o\x0b between accuracy\nvs. computational cost). For \\base model" and \\large model", we refer to using the ResNet 50 or ResNet 101\nbackbones [ 13], respectively. One can train models of di\x0berent architectures, like Faster R-CNN [ 28] (F) and Mask\nR-CNN [ 12] (M). For example, an F in the Large Model column indicates it has a Faster R-CNN model trained\nusing the ResNet 101 backbone. The platform is maintained and a number of additions will be made to the model\nzoo in coming months.\nlayout data structures , which are optimized for e\x0eciency and versatility. 3) When\nnecessary, users can employ existing or customized OCR models via the uni\x0ced\nAPI provided in the OCR module . 4)LayoutParser comes with a set of utility\nfunctions for the visualization and storage of the layout data. 5) LayoutParser\nis also highly customizable, via its integration with functions for layout data\nannotation and model training . We now provide detailed descriptions for each\ncomponent.\n3.1 Layout Detection Models\nInLayoutParser , a layout model takes a document image as an input and\ngenerates a list of rectangular boxes for the target content regions. Di\x0berent\nfrom traditional methods, it relies on deep convolutional neural networks rather\nthan manually curated rules to identify content regions. It is formulated as an\nobject detection problem and state-of-the-art models like Faster R-CNN [ 28] and\nMask R-CNN [ 12] are used. This yields prediction results of high accuracy and\nmakes it possible to build a concise, generalized interface for layout detection.\nLayoutParser , built upon Detectron2 [ 35], provides a minimal API that can\nperform layout detection with only four lines of code in Python:\n1import layoutparser as lp\n2image = cv2. imread (" image_file ") # load images\n3model = lp. Detectron2LayoutModel (\n4 "lp :// PubLayNet / faster_rcnn_R_50_FPN_3x / config ")\n5layout = model . detect ( image )\nLayoutParser provides a wealth of pre-trained model weights using various\ndatasets covering di\x0berent languages, time periods, and document types. Due to\ndomain shift [ 7], the prediction performance can notably drop when models are ap-\nplied to target samples that are signi\x0ccantly di\x0berent from the training dataset. As\ndocument structures and layouts vary greatly in di\x0berent domains, it is important\nto select models trained on a dataset similar to the test samples. A semantic syntax\nis used for initializing the model weights in LayoutParser , using both the dataset\nname and model name lp://<dataset-name>/<model-architecture-name> .')(4, 'LayoutParser: A Unified Toolkit for DL-Based DIA\n5\nTable 1: Current layout detection models in the LayoutParser model zoo\n<table><tr><th>Dataset</th><th>Base Model1</th><th>Large Model</th><th>Notes</th></tr><tr><td>PubLayNet [38]</td><td>F / M</td><td>M</td><td>Layouts of modern scientific documents</td></tr><tr><td>PRImA [3]</td><td>M</td><td>-</td><td>Layouts of scanned modern magazines and scientific reports</td></tr><tr><td>Newspaper [17]</td><td>F</td><td>-</td><td>Layouts of scanned US newspapers from the 20th century</td></tr><tr><td>TableBank [18]</td><td>F</td><td>F</td><td>Table region on modern scientific and business document</td></tr><tr><td>HJDataset [31]</td><td>F / M</td><td>-</td><td>Layouts of history Japanese documents</td></tr></table>\n1 For each dataset, we train several models of different sizes for different needs (the trade-off between accuracy vs. computational cost). For “base model” and “large model”, we refer to using the ResNet 50 or ResNet 101 backbones [13], respectively. One can train models of different architectures, like Faster R-CNN [28] (F) and Mask R-CNN [12] (M). For example, an F in the Large Model column indicates it has a Faster R-CNN model trained using the ResNet 101 backbone. The platform is maintained and a number of additions will be made to the model zoo in coming months.\nlayout data structures, which are optimized for efficiency and versatility. 3) When necessary, users can employ existing or customized OCR models via the unified API provided in the OCR module. 4) LayoutParser comes with a set of utility functions for the visualization and storage of the layout data. 5) LayoutParser is also highly customizable, via its integration with functions for layout data annotation and model training. We now provide detailed descriptions for each component.\n3.1 Layout Detection Models\nIn LayoutParser, a layout model takes a document image as an input and generates a list of rectangular boxes for the target content regions. Different from traditional methods, it relies on deep convolutional neural networks rather than manually curated rules to identify content regions. It is formulated as an object detection problem and state-of-the-art models like Faster R-CNN [28] and Mask R-CNN [12] are used. This yields prediction results of high accuracy and makes it possible to build a concise, generalized interface for layout detection. LayoutParser, built upon Detectron2 [35], provides a minimal API that can perform layout detection with only four lines of code in Python:\n1 import layoutparser as lp\n2 image = cv2 . imread (" image_file ") # load images 3 model = lp . Detectron2LayoutModel ( 4 "lp :// PubLayNet / faster_rcnn_R_50_FPN_3x / config ")\n5 layout = model . detect ( image )\nLayoutParser provides a wealth of pre-trained model weights using various datasets covering different languages, time periods, and document types. Due to domain shift [7], the prediction performance can notably drop when models are ap- plied to target samples that are significantly different from the training dataset. As document structures and layouts vary greatly in different domains, it is important to select models trained on a dataset similar to the test samples. A semantic syntax is used for initializing the model weights in LayoutParser, using both the dataset\nand model name lp://<dataset-name>/<model-architecture-name>. name ')

まとめ

Azure Form Recognizerを使うと、ドキュメントファイルからテーブルを読み取ることができました。ChatGPTと連携する際にテーブルを渡せるので表を読み込ませることができそうですね。エクセルとかをPDFにした場合でも読み取れるか試してみたくなりますね。

もしかしたらpypdfでもテーブルを読み取れるかもしれないので、読み取る方法を知っている方がいれば教えていただけると幸いです!

宣伝

@_mkazutakaでTwitterやってます。ぜひぜひ情報共有がてらフォローしてもらえると幸いです。

カジュアル面談も随時募集しているので、よかったらご応募くださいー!